Climate science and meteorology, sensors, data analytics and machine learning with Dr Soumyabrata Dev, School of Computer Science.

Q. Tell us about your journey to becoming a researcher – what did you study and what interested you in particular about the kind of research you do?

A. I grew up overseas, in the remote town of Nagaon in the state of Assam, in north-eastern India. I graduated summa cum laude with a B.Tech from the National Institute of Technology Silchar, India, in 2010. After graduating, I worked briefly at Ericsson India as a network engineer from 2010 to 2012, and then completed my Ph.D. at Nanyang Technological University (NTU), Singapore, in 2017. My research interests are computer vision and machine learning, and how these techniques can be applied to better understand the environment. It was during my Ph.D. that I became fascinated by research, and by how conventional techniques from computer science can be used to solve some of the most pressing environmental problems. I am fortunate to have collaborated with some of the most brilliant minds, and to conduct research at the crossroads of computer science and environmental studies. Knowing that our research helps create a sustainable way of life for everyone drives me to keep pushing the boundaries of research every day.

Q. You work on both sensors and data analytics. How do these two interests work together?

A. Our research group, THEIA: THE visIon and Analytics lab, based at the School of Computer Science, works broadly in the areas of computer vision, image processing, and remote sensing. We use techniques from statistical machine learning and deep learning to solve interdisciplinary computational problems in earth observation, atmospheric research, and solar and renewable energy. We use a variety of sensors to continuously observe atmospheric events, including satellite observations, radiosonde (popularly known as weather balloon) data, ground-based weather stations, and whole sky cameras. Combining these sensors lets us record atmospheric events at diverse spatial and temporal scales. However, the data obtained from these sensors are noisy, often have missing values, and require extensive geo-calibration before further processing. At this stage, conventional techniques from mathematics, statistics and computer science help us generate clean, calibrated data. Hardware sensors and data analytics therefore work closely together to provide meaningful insights into the problem at hand.
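
The cleaning step mentioned above can be sketched in a few lines. This is a minimal illustration, not the group's actual pipeline: it assumes a single regularly sampled sensor series in which missing readings are `None`, fills gaps by linear interpolation, and then replaces isolated spikes with a rolling median. The function names, the window size and the `max_dev` threshold are hypothetical choices for illustration.

```python
from statistics import median
from typing import List, Optional


def fill_gaps(series: List[Optional[float]]) -> List[float]:
    """Fill missing readings (None) by linear interpolation between the nearest
    valid neighbours; gaps at either end are held at the nearest valid value."""
    valid = [i for i, v in enumerate(series) if v is not None]
    if not valid:
        raise ValueError("series contains no valid readings")
    filled: List[float] = []
    for i, v in enumerate(series):
        if v is not None:
            filled.append(float(v))
            continue
        left = max((j for j in valid if j < i), default=None)
        right = min((j for j in valid if j > i), default=None)
        if left is None:      # leading gap: hold the first valid value
            filled.append(float(series[right]))
        elif right is None:   # trailing gap: hold the last valid value
            filled.append(float(series[left]))
        else:                 # interior gap: interpolate linearly
            frac = (i - left) / (right - left)
            filled.append(series[left] + frac * (series[right] - series[left]))
    return filled


def despike(series: List[float], window: int = 5, max_dev: float = 3.0) -> List[float]:
    """Replace any reading that differs from its local median by more than
    max_dev (in the series' own units) with that median."""
    half = window // 2
    cleaned = []
    for i, v in enumerate(series):
        local = median(series[max(0, i - half): i + half + 1])
        cleaned.append(local if abs(v - local) > max_dev else v)
    return cleaned


# Hypothetical hourly temperatures (deg C) with two gaps and one spurious spike.
raw = [12.0, None, 14.0, 15.0, 40.0, 16.0, None, None, 17.5]
clean = despike(fill_gaps(raw))
```

Interpolating before despiking means the rolling median never has to deal with missing values; geo-calibration, which depends on each instrument, is left out of this sketch.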

“My research interests in atmospheric science align strongly with two UN Sustainable Development Goals: Goal 7 (Affordable and Clean Energy) and Goal 13 (Climate Action).”

– Dr Soumyabrata Dev

Q. How do your technical interests connect to environmental and sustainability concerns?

A. My research interests include image processing, machine learning, and remote sensing. My research interests in atmospheric science align strongly with two UN Sustainable Development Goals: Goal 7 (Affordable and Clean Energy) and Goal 13 (Climate Action). One of our research goals is to effectively predict the amount of solar energy incident on a particular location using historical ground-based sensor data. This helps PV engineers support the transition from conventional energy sources to solar and other renewable energy, which in turn helps consumers lead a more sustainable lifestyle.
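
To make prediction from historical data concrete, here is a deliberately simple baseline, not the group's model: an ordinary least-squares fit of the next day's solar energy against the current day's value (a lag-1 linear regression). The function names and the synthetic numbers are illustrative assumptions.

```python
from typing import List, Tuple


def fit_lag1(history: List[float]) -> Tuple[float, float]:
    """Least-squares fit of y[t+1] = a + b * y[t]; returns (a, b)."""
    x, y = history[:-1], history[1:]
    n = len(x)
    if n < 2:
        raise ValueError("need at least three observations")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("history is constant; slope is undefined")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b


def predict_next(history: List[float], a: float, b: float) -> float:
    """One-step-ahead forecast from the most recent observation."""
    return a + b * history[-1]


# Synthetic daily solar energy (kWh/m^2), generated to follow y[t+1] = 1 + 0.5 * y[t]
# so that the fitted coefficients can be checked by eye.
daily_energy = [4.0, 3.0, 2.5, 2.25, 2.125]
a, b = fit_lag1(daily_energy)
forecast = predict_next(daily_energy, a, b)  # about 2.0625 kWh/m^2
```

A baseline like this is mainly useful as a yardstick: a more sophisticated forecaster is only worth its cost if it clearly beats it.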

Another technical interest of our group is working with multi-modal sensor data and creating a platform that gives climate researchers easy access to it. We aim to make knowledge graph technologies more accessible to climate and energy researchers. Many of today’s climate data centers, such as KNMI Climate Explorer and NOAA, present their collected data as raw tables (e.g. RDB, CSV, JSON). A popular solution that has recently been widely explored is to use an ontology or knowledge graph, which offers the expressivity and flexibility to extend easily to various interoperable domains. Such graphs help define the semantic model of the underlying data together with domain knowledge.
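
As a rough illustration of moving from raw tables to a graph, the sketch below turns rows of a daily-summary CSV into RDF triples in N-Triples syntax. The namespace `http://example.org/climate/`, the predicate names and the station ID are hypothetical; the column names `STATION`, `DATE`, `TMAX`, `TMIN` and `PRCP` are modelled on NOAA daily summaries exports but should be treated as an assumption. A real pipeline would normally use an RDF library and an established vocabulary rather than hand-built strings.

```python
import csv
import io
from typing import Iterator

EX = "http://example.org/climate/"          # hypothetical namespace
XSD = "http://www.w3.org/2001/XMLSchema#"   # standard XML Schema datatypes


def rows_to_ntriples(csv_text: str) -> Iterator[str]:
    """Yield one N-Triples line per fact; empty cells produce no triple."""
    for row in csv.DictReader(io.StringIO(csv_text)):
        obs = f"<{EX}obs/{row['STATION']}/{row['DATE']}>"
        yield f"{obs} <{EX}station> <{EX}station/{row['STATION']}> ."
        yield f'{obs} <{EX}date> "{row["DATE"]}"^^<{XSD}date> .'
        for column in ("TMAX", "TMIN", "PRCP"):
            value = row.get(column)
            if value:  # skip missing measurements instead of emitting empty literals
                yield f'{obs} <{EX}{column.lower()}> "{value}"^^<{XSD}decimal> .'


sample = (
    "STATION,DATE,TMAX,TMIN,PRCP\n"
    "EXAMPLE0001,2021-01-01,8.1,2.3,0.0\n"
    "EXAMPLE0001,2021-01-02,7.4,,1.2\n"
)
triples = list(rows_to_ntriples(sample))
```

Each observation gets its own IRI, so measurements from other sources can later be attached to the same station and date without changing the original table.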

Our project, Link Climate, was created to complement the NOAA daily climate summary datasets for Ireland and England with a data navigation capability in the form of a knowledge graph. It consists of a SPARQL server, with data periodically updated from NOAA Climate Data Online, and a dereferencing engine powered by LodView. Thanks to a collaborative research grant from UCD Earth Institute, Fabrizio Orlandi (ADAPT Centre, Trinity College Dublin), Isabella Gollini (School of Mathematics and Statistics, UCD) and I are now exploring knowledge graph technologies to integrate atmospheric pollutant data into our database. Such systematic data integration helps us address the pressing problems of climate change and extreme weather events.
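
To illustrate what integrating pollutant data into the graph makes possible, the toy evaluator below matches a SPARQL-style basic graph pattern (a list of triple patterns containing `?variables`) against an in-memory list of triples, joining weather and air-quality observations that share a station and date. It is a naive nested-loop join for illustration only, not how the Link Climate SPARQL server evaluates queries; the `ex:` identifiers and the `ex:no2` predicate are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Triple = Tuple[str, str, str]
Binding = Dict[str, str]


def match(pattern: Triple, triple: Triple, binding: Binding) -> Optional[Binding]:
    """Extend binding so that pattern equals triple, or return None on conflict."""
    extended = dict(binding)
    for term, value in zip(pattern, triple):
        if term.startswith("?"):            # variable: bind it or check consistency
            if extended.setdefault(term, value) != value:
                return None
        elif term != value:                 # constant: must match exactly
            return None
    return extended


def evaluate(patterns: List[Triple], graph: List[Triple]) -> List[Binding]:
    """Evaluate a basic graph pattern by joining one triple pattern at a time."""
    bindings: List[Binding] = [{}]
    for pattern in patterns:
        next_bindings = []
        for binding in bindings:
            for triple in graph:
                extended = match(pattern, triple, binding)
                if extended is not None:
                    next_bindings.append(extended)
        bindings = next_bindings
    return bindings


weather = [
    ("ex:obs1", "ex:station", "ex:dublin"), ("ex:obs1", "ex:date", "2021-01-01"),
    ("ex:obs1", "ex:tmax", "8.1"),
    ("ex:obs2", "ex:station", "ex:dublin"), ("ex:obs2", "ex:date", "2021-01-02"),
    ("ex:obs2", "ex:tmax", "7.4"),
]
pollution = [
    ("ex:aq1", "ex:station", "ex:dublin"), ("ex:aq1", "ex:date", "2021-01-01"),
    ("ex:aq1", "ex:no2", "21.0"),
]
# Roughly: SELECT * WHERE { ?obs ex:tmax ?tmax ; ex:station ?st ; ex:date ?d .
#                           ?aq ex:station ?st ; ex:date ?d ; ex:no2 ?no2 . }
query = [
    ("?obs", "ex:tmax", "?tmax"), ("?obs", "ex:station", "?st"), ("?obs", "ex:date", "?d"),
    ("?aq", "ex:station", "?st"), ("?aq", "ex:date", "?d"), ("?aq", "ex:no2", "?no2"),
]
results = evaluate(query, weather + pollution)
```

Only the 1 January observation survives the join, because it is the only weather record with a pollutant measurement at the same station and date.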

Q. Can you talk about the use of ground sensors in your work, for both cloud and solar investigation? What are the major recent technical advances in this field, and which interest you most?

A. Traditionally, satellite measurements have been the main source for understanding events in the atmosphere. Their main advantage is that most satellite measurements are available for every location on the Earth’s surface, which lets us monitor events above it regularly.

Take rainfall as an example. Understanding the occurrence of rainfall at a particular location involves measuring the amount of humidity in the atmosphere, and satellite instruments are a great help here. Satellite precipitation sensors remotely record parts of the electromagnetic spectrum; some are active sensors that send out a signal and measure how the atmosphere affects it. There are two main types of satellite precipitation sensors: thermal infrared (IR) and microwave. IR sensors have low skill at short time and space scales, but because they are carried on geosynchronous satellites they provide more frequent observations. They work best in cases of intense convection. Microwave sensors, by contrast, have greater skill than IR at short time and space scales, but they are available only on satellites in low Earth orbit. Because a single such satellite cannot capture the full precipitation cycle, there are gaps of several hours to days between its precipitation measurements. Missions such as the Tropical Rainfall Measuring Mission (TRMM) and the Global Precipitation Measurement (GPM) constellation use microwave sensors to estimate precipitation.

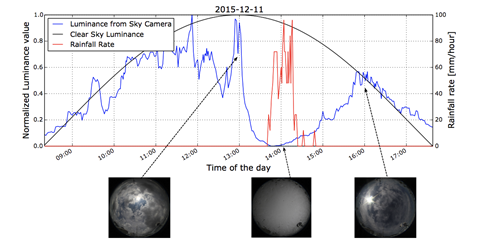

Ground-based sensors offer an excellent alternative, as they can collect localised information at high temporal and spatial resolution. They include rain gauges, weather stations, and, quite popularly, ground-based whole sky imagers. Our research group has extensive experience in designing and prototyping such low-cost ground-based sky cameras. To return to our example of estimating precipitation, ground-based sky cameras provide localised cloud information: we can track the movement of clouds, and the change in their color, to anticipate imminent rainfall at a particular location. Figure 1 illustrates this, showing that the luminance of the captured image can be a strong indicator of the onset of a rainfall event. Such localised analysis of cloud formation and subsequent rainfall is possible only with ground-based sky cameras.
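
One simple way to turn the luminance observation into a signal is sketched below: compute the mean luminance of each sky image and flag the first frame whose luminance falls well below the average of the preceding frames. This is a minimal illustration, not the group's detection method; it assumes linear RGB pixel values, uses the Rec. 709 luminance weights, and the `baseline_frames` and `drop_ratio` parameters are hypothetical values that would need tuning against rain-gauge records.

```python
from statistics import mean
from typing import List, Optional, Sequence, Tuple

Pixel = Tuple[float, float, float]  # linear R, G, B


def mean_luminance(image: Sequence[Pixel]) -> float:
    """Average relative luminance of an image using Rec. 709 weights."""
    return mean(0.2126 * r + 0.7152 * g + 0.0722 * b for r, g, b in image)


def detect_onset(luminance: List[float], baseline_frames: int = 3,
                 drop_ratio: float = 0.7) -> Optional[int]:
    """Return the index of the first frame darker than drop_ratio times the mean of
    the previous baseline_frames frames, or None if no such drop occurs."""
    for i in range(baseline_frames, len(luminance)):
        baseline = mean(luminance[i - baseline_frames:i])
        if luminance[i] < drop_ratio * baseline:
            return i
    return None


# Synthetic sequence: uniform grey frames that darken as a rain cloud moves in.
levels = [200, 198, 202, 195, 120, 90]
frames = [[(v, v, v)] * 4 for v in levels]
series = [mean_luminance(f) for f in frames]
onset = detect_onset(series)
```

Comparing against a short rolling baseline, rather than a fixed threshold, keeps the rule from firing simply because the sun is lower in the sky.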

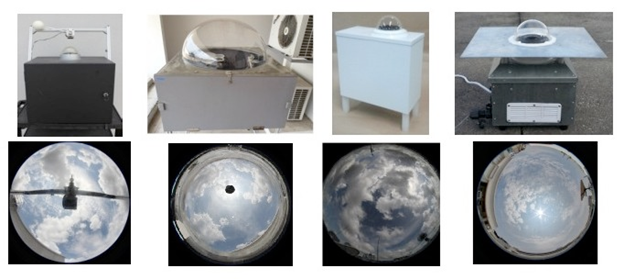

Over the years, our lab has designed and built several versions of ground-based sky cameras, or whole sky imagers (WSIs), that are efficient, low-cost, and highly flexible. Our custom-built WSIs are equipped with a high-resolution fish-eye lens and capture images of the sky at user-defined intervals. They are modular, with easily configurable hardware and software, offer a high image resolution (5184 × 3456 pixels), and cost less than US$3,000 to build. They also give us the flexibility to explore High Dynamic Range Imaging (HDRI) techniques, which capture the wide range of luminosity in the sky scene. Figure 2 shows the various versions of our sky cameras along with their captured images.
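
The HDRI idea can be illustrated with a standard weighted-average radiance estimate over bracketed exposures, in the spirit of Debevec and Malik's method. This sketch is not the lab's pipeline: it assumes the camera response is linear, that pixel values are normalised to [0, 1], and that the frames are already aligned. Each exposure contributes its pixel value divided by its exposure time, weighted by a hat function so that nearly black or saturated pixels count little or not at all.

```python
from typing import List, Sequence


def hat_weight(z: float) -> float:
    """Weight mid-tones most; pixels at 0 (black) or 1 (saturated) get zero weight."""
    return min(z, 1.0 - z)


def fuse_exposures(exposures: Sequence[Sequence[float]],
                   times: Sequence[float]) -> List[float]:
    """Estimate relative scene radiance per pixel from bracketed exposures, assuming
    a linear camera response (pixel value = radiance * exposure time, clipped to 1)."""
    if len(exposures) != len(times):
        raise ValueError("need one exposure time per image")
    shortest = min(range(len(times)), key=lambda k: times[k])
    radiance = []
    for p in range(len(exposures[0])):
        num = den = 0.0
        for image, t in zip(exposures, times):
            w = hat_weight(image[p])
            num += w * image[p] / t
            den += w
        # If every sample is black or saturated, fall back to the shortest exposure.
        radiance.append(num / den if den > 0 else exposures[shortest][p] / times[shortest])
    return radiance


# Two pixels seen at exposure times of 1, 1/10 and 1/100 (arbitrary units);
# the second pixel saturates in the longest exposure.
images = [[0.5, 1.0], [0.05, 0.8], [0.005, 0.08]]
hdr = fuse_exposures(images, [1.0, 0.1, 0.01])
```

The saturated sample gets zero weight, so the bright second pixel is recovered from the two shorter exposures alone; a real camera would first need its response curve calibrated.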

Thanks to a research grant from Google, I am currently working with other UCD Earth Institute members, Michela Bertolotto and Gavin McArdle (both from the School of Computer Science), on designing an extremely low-cost and robust sky camera for continuous monitoring of atmospheric events. These low-cost ground-based cameras can be installed at multiple sites in our case-study area, allowing stereo reconstruction of the cloud-base height.
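
The geometry behind stereo cloud-base height can be shown with simple triangulation. Assume, hypothetically, two cameras at the same altitude on flat ground, b metres apart, both observing the same cloud feature that lies in the vertical plane through the two cameras and between them, at elevation angles α1 and α2. The horizontal distances from each camera to the point beneath the feature are h·cot α1 and h·cot α2, and they sum to b, so h = b / (cot α1 + cot α2). A real stereo setup must also match the feature across fish-eye images and handle arbitrary azimuths, which this sketch ignores.

```python
import math


def cloud_base_height(baseline_m: float, elev1_deg: float, elev2_deg: float) -> float:
    """Height of a cloud feature seen from two cameras on either side of it.

    Assumes both cameras are at the same altitude, the feature lies in the vertical
    plane through the cameras and between them, and the Earth is flat over the baseline.
    """
    if not (0 < elev1_deg <= 90 and 0 < elev2_deg <= 90):
        raise ValueError("elevation angles must be in (0, 90] degrees")
    cot1 = 1.0 / math.tan(math.radians(elev1_deg))
    cot2 = 1.0 / math.tan(math.radians(elev2_deg))
    return baseline_m / (cot1 + cot2)


# Two cameras 1 km apart, each seeing the same cloud edge 45 degrees above the horizon.
height = cloud_base_height(1000.0, 45.0, 45.0)  # about 500 m
```

The formula also shows why a wider baseline helps: for a given angular error in locating the feature, the resulting height error shrinks as b grows.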

Q. What kind of impact have these technologies had on areas such as forecasting and solar energy management?

A. Our research group has extensive experience in designing low-cost sensors and state-of-the-art deep neural networks for a better understanding of the atmosphere. We take a multi-modal data integration approach, combining various sensors (camera images and weather station recordings) to provide useful insights about solar energy. We use point observations from weather stations for accurate solar energy estimation and forecasting, and we design LSTM-based neural networks for short-term and long-term forecasting of the solar energy received at a particular location. This is useful for photovoltaic (PV) generation and integration.
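
The forecasting networks themselves are beyond a short example, but the data preparation step that usually precedes training an LSTM is easy to sketch: the weather-station series is cut into fixed-length input windows, each paired with the value a chosen number of steps ahead. A short horizon gives a short-term forecasting task, a longer one a long-term task. The window length, horizons, split fraction and readings below are illustrative assumptions, not the group's settings.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[List[float], float]


def make_windows(series: Sequence[float], n_in: int, horizon: int) -> List[Sample]:
    """Pair each run of n_in consecutive values with the value `horizon` steps
    after the end of the run (horizon=1 means the very next value)."""
    if n_in < 1 or horizon < 1:
        raise ValueError("n_in and horizon must be positive")
    samples = []
    for start in range(len(series) - n_in - horizon + 1):
        inputs = list(series[start:start + n_in])
        target = series[start + n_in + horizon - 1]
        samples.append((inputs, target))
    return samples


def chronological_split(samples: List[Sample], train_fraction: float = 0.8):
    """Split in time order, without shuffling, so evaluation uses later periods
    than training."""
    cut = int(len(samples) * train_fraction)
    return samples[:cut], samples[cut:]


# Hypothetical hourly irradiance readings (W/m^2).
irradiance = [0.0, 120.0, 340.0, 560.0, 610.0, 580.0, 430.0, 210.0]
short_term = make_windows(irradiance, n_in=3, horizon=1)
long_term = make_windows(irradiance, n_in=3, horizon=3)
train, test = chronological_split(short_term)
```

Each input window would then be reshaped to (timesteps, features) before being fed to an LSTM layer; additional weather variables simply become extra features per timestep.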

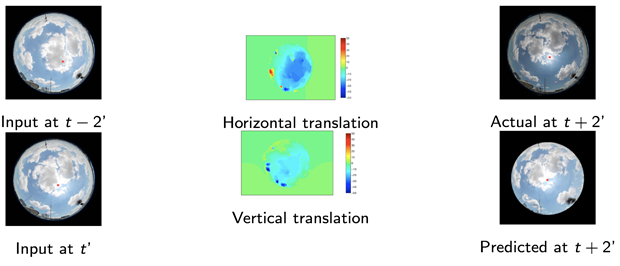

In addition to weather data, we also derive solar analytics from images captured by ground-based sky cameras. Unlike solar pyranometers and other conventional meteorological sensors, ground-based sky images carry additional information about the continuous evolution of clouds over time. We have used these cloud/sky images to develop a solar radiation estimation model that can accurately capture short-term fluctuations in solar irradiance. We also use sequences of images to estimate cloud motion fields, derived from an optical flow formulation applied to two successive image frames (cf. Figure 3). We compute the horizontal and vertical translation of pixels, and from it predict the future locations of clouds with a lead time of a few minutes.
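
The step from motion to prediction can be sketched with exhaustive block matching, a simpler technique than the optical flow formulation described above: a central block of the earlier frame is compared with every shifted position in the later frame, and the integer shift with the smallest sum of absolute differences is taken as the cloud motion. Extrapolating that shift gives a predicted position a few frames ahead. This assumes a single rigid translation for the whole block and grayscale frames stored as nested lists; real cloud motion fields are dense and sub-pixel.

```python
from typing import List, Tuple

Frame = List[List[float]]


def estimate_shift(prev: Frame, curr: Frame, max_shift: int = 2) -> Tuple[int, int]:
    """Return the (dx, dy) translation, in pixels, that best maps the central block of
    prev onto curr, searching all integer shifts within +/- max_shift."""
    h, w = len(prev), len(prev[0])
    m = max_shift
    if h <= 2 * m or w <= 2 * m:
        raise ValueError("frame too small for the search range")
    best, best_cost = (0, 0), float("inf")
    for dy in range(-m, m + 1):
        for dx in range(-m, m + 1):
            # Sum of absolute differences over the block that stays in bounds for every shift.
            cost = sum(abs(curr[y + dy][x + dx] - prev[y][x])
                       for y in range(m, h - m) for x in range(m, w - m))
            if cost < best_cost:
                best, best_cost = (dx, dy), cost
    return best


def predict_position(pos: Tuple[float, float], shift: Tuple[int, int],
                     frames_ahead: int) -> Tuple[float, float]:
    """Linearly extrapolate a cloud feature's (x, y) position, assuming constant motion."""
    return pos[0] + shift[0] * frames_ahead, pos[1] + shift[1] * frames_ahead


def blank(size: int = 10) -> Frame:
    return [[0.0] * size for _ in range(size)]


# Synthetic frames: a 2x2 bright cloud moves 2 px right and 1 px down between frames.
frame0, frame1 = blank(), blank()
for y in (4, 5):
    for x in (4, 5):
        frame0[y][x] = 1.0
        frame1[y + 1][x + 2] = 1.0
motion = estimate_shift(frame0, frame1)
future = predict_position((4.0, 4.0), motion, frames_ahead=3)
```

With a known frame interval, the pixel shift per frame converts directly into a lead time for when a cloud will reach a given point in the image, such as the region around the sun.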

Q. What kinds of future applications do you foresee for networked sensors and machine learning, particularly in addressing climate and environmental problems?

A. My previous employer, Ericsson, predicts massive growth in the number of networked devices and IoT sensors. The number of massive IoT connections is expected to reach 330 million by the end of 2021, and by 2026 these technologies are expected to account for 46 percent of all cellular IoT connections. I believe that such rapid growth in the deployment of networked sensors will greatly accelerate research and development in environmental monitoring. It will help us monitor atmospheric events continuously and archive high-quality data at high spatial and temporal resolution.

With recent advances in the computing power of HPC clusters, it is now possible to perform extensive statistical analysis on environmental big data. State-of-the-art deep neural networks can be used for many tasks, including estimating weather variables and predicting solar energy. Continued research in these areas will also inspire the general public and raise awareness of climate change, and will create major shifts in public policy and individual behavior regarding energy, sustainable living, and more. Through my research, I firmly envisage such a sustainable future.